In the Education & Individual Training (E&IT) domain, the Continuous Improvement Process (CIP) is not an occasional activity but a structured, continuous, complex, and evidence-based process. Education and Training Facilities (ETFs), whether they deliver residential courses, distributed learning, specialized training, or mission-focused education programmes, must constantly refine their Quality Management Systems (QMS) to meet evolving NATO E&T requirements, student and graduate needs, and organizational goals and objectives.

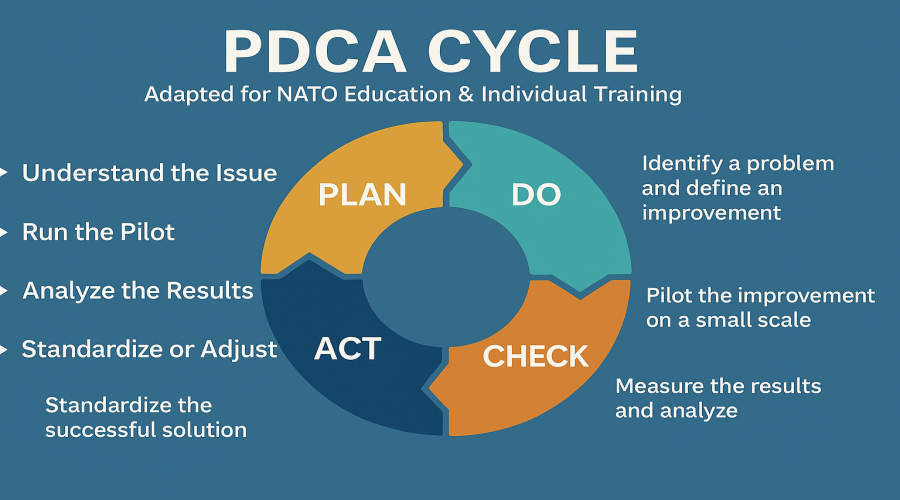

One of the most effective tools for driving this continuous improvement is the PDCA Cycle, a simple, repeatable framework that has shaped modern quality management for decades. Also known as the Deming Cycle or Shewhart Cycle, PDCA (Plan-Do-Check-Act) provides a disciplined method to analyze issues, test improvements, and standardize what works, ensuring that decisions rely on data, not assumptions.

This guide explains how PDCA fits into NATO QA practices, shows how ETFs can use it at institutional and course levels, and provides practical examples from the E&IT environment.

What Is the PDCA Cycle?

The PDCA Cycle is a four-stage iterative model used to continuously enhance processes, performance, and learning outcomes. It encourages teams to plan improvements thoroughly, test ideas on a manageable scale, analyze results, and institutionalize successful solutions. The stages are:

✅ Plan – Identify a problem, root causes, and an improvement hypothesis.

✅ Do – Pilot the improvement on a limited scale.

✅ Check – Measure the results against expectations.

✅ Act – Standardize the successful solution or refine and repeat.

In an ETF context, PDCA helps optimize everything from training delivery and course design to instructor development, student support systems, and internal QMS documentation.

The Four PDCA Stages Explained (E&IT/ETF context)

1. PLAN – Understand the issue and design an improvement

This phase lays the foundation. Before taking action, the ETF QA Team, Course Director, or QA Manager must clearly understand the issue and define what “success” looks like.

Key questions for an ETF:

- What specific training challenge are we trying to solve?

- What evidence do we have (AQARs, student surveys, instructor feedback, performance data)?

- What NATO Quality Standards are affected?

- What is the measurable objective of the improvement?

Tools commonly used in ETFs:

- Training needs analysis (TNA)

- Cause-and-effect (fishbone) diagrams for identifying the root causes of a problem (e.g., “low learning retention”)

- Pareto charts to prioritize recurring issues (e.g., assessment errors)

- Process mapping of course delivery or student onboarding

- Diagrams of organization-level processes.

A clear plan ensures the ETF QA team defines the issue correctly and identifies the right corrective or preventive action.
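As an illustration of prioritizing recurring issues during the Plan phase, the following is a minimal sketch of a frequency ranking in the style of a Pareto analysis. The function name `pareto_ranking` and the issue log are hypothetical; in practice the entries would come from sources such as AQARs, student surveys, and instructor reports.

```python
from collections import Counter


def pareto_ranking(issue_log):
    """Return (issue, count, cumulative_share) tuples, most frequent first."""
    counts = Counter(issue_log)
    total = sum(counts.values())
    ranking = []
    cumulative = 0
    for issue, count in counts.most_common():
        cumulative += count
        ranking.append((issue, count, round(cumulative / total, 2)))
    return ranking


# Hypothetical issue log (illustrative values only, not real ETF data).
issue_log = (
    ["assessment error"] * 6
    + ["late course material"] * 3
    + ["classroom IT fault"] * 1
)

for issue, count, share in pareto_ranking(issue_log):
    print(f"{issue}: {count} reports, cumulative share {share:.0%}")
```

The issues at the top of the ranking, which account for most of the reports, are the natural candidates for the first improvement hypothesis.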

2. DO – Run the pilot/trial

This is where the improvement is tested: it is not yet implemented across the whole ETF, but piloted on a limited scale with minimum risk.

Typical “Do” examples inside ETFs:

- Testing an improved assessment rubric on one iteration of a course.

- Running a revised instructor preparation checklist with a single instructor team.

- Piloting a new online pre-course module with one student group.

- Trialing an updated after-action review (AAR) format after one practical exercise.

During this stage, the team should:

- Document what they observed.

- Capture any unexpected issues.

- Collect data and feedback for analysis.

3. CHECK – Analyze the pilot/trial results

In the check phase, the ETF evaluates the pilot’s effectiveness using measurable evidence (metrics).

Key questions:

- Did the change address the problem?

- What do student evaluations, instructor reports, or KPIs show?

- Did the pilot reduce errors, delays, or frustrations?

- What feedback did students, instructors, or support staff provide?

- Were NATO Quality Standards better met?

Typical tools used by ETFs:

- Before/after comparison of learning outcomes.

- KPI dashboards (completion rates, assessment scores, number of courses delivered).

- Control charts for exam errors or feedback trends.

- Qualitative feedback analysis.

- Compliance checks against the ETF’s QA Policy or SOPs.

If results are positive, the improvement can move into the Act phase; if not, the plan is refined and tested again.
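To make the control chart idea from the tools list concrete, here is a simplified sketch that derives limits from baseline data (mean plus or minus three sample standard deviations) and flags pilot values that fall outside them. The function names and the error counts are hypothetical, and a full Shewhart chart would normally use more data points and chart-specific constants.

```python
from statistics import mean, stdev


def control_limits(baseline):
    """Return (lower, centre, upper) as mean +/- 3 sample standard deviations."""
    centre = mean(baseline)
    spread = stdev(baseline)
    return centre - 3 * spread, centre, centre + 3 * spread


def out_of_control(baseline, new_points):
    """Return the new points that fall outside the baseline control limits."""
    lower, _, upper = control_limits(baseline)
    return [x for x in new_points if x < lower or x > upper]


# Hypothetical marking errors per exam (illustrative values only).
baseline_errors = [4, 5, 6, 5, 4, 6, 5, 5]
pilot_errors = [5, 4, 1]

lower, centre, upper = control_limits(baseline_errors)
print(f"limits: {lower:.2f} .. {upper:.2f}, centre {centre}")
print("points outside limits:", out_of_control(baseline_errors, pilot_errors))
```

A pilot value below the lower limit suggests a real shift rather than normal variation, which is the kind of evidence the Check phase looks for before moving on.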

4. ACT – Standardize or adjust

If the improvement worked, this is where it becomes formalized.

“Act” activities inside an ETF:

- Updating SOPs, course documentation, lesson plans, and QMS procedures.

- Training instructors and staff on the new process.

- Integrating the improvement into internal QA audits, Annual QA Reports (AQARs), and NATO Course Certification and Institutional Accreditation evidence.

- Communicating the change to stakeholders

- Monitoring long-term indicators to ensure sustainability.

If the solution did not work, the Act phase may mean adjusting the plan and restarting the PDCA cycle. In Quality Management culture, PDCA is never a one-off. It is a living cycle embedded into everyday practice.
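The repeat-until-it-works logic of the cycle can be sketched in a few lines. This is only an illustrative model, assuming each cycle yields one measured value that is compared with the measurable objective set in the Plan phase; `run_pdca`, the completion rates, and the target are all hypothetical.

```python
def run_pdca(pilot_results, target):
    """Walk through successive pilot results until one meets the target.

    Returns (cycle_number, decision), where decision is "standardize" when
    the objective was met and "refine and repeat" when no pilot reached it.
    """
    for cycle, measured in enumerate(pilot_results, start=1):
        if measured >= target:
            return cycle, "standardize"
    return len(pilot_results), "refine and repeat"


# Hypothetical pre-course module completion rates over three pilot cycles.
completion_rates = [0.72, 0.81, 0.92]
cycle, decision = run_pdca(completion_rates, target=0.90)
print(f"cycle {cycle}: {decision}")
```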

Real examples of PDCA in Education & Individual Training

These ETF-specific examples illustrate how PDCA works in practice.

Example 1: Improving student orientation and pre-course preparation

Plan: An ETF noticed that many students arrived underprepared, missing required readings or prerequisites. Data: Guest speakers’ comments, instructor reports, and delays during Day 1. Improvement idea: Create a short online induction module with mandatory checks.

Do: Pilot the induction module with participants in a single course iteration.

Check: Measure completion rates and compare readiness indicators against previous groups. Instructors reported a noticeable reduction in Day 1 delays.

Act: Integrate the online induction into all courses. Update SOPs, course admin instructions, and communication templates.

Example 2: Reducing assessment errors during practical exercises

Plan: Internal QA reviews identified inconsistencies in how instructors assessed practical tasks. Root cause: Variations in rubric interpretation. Proposal: Create a standardized, clearer rubric with examples of acceptable performance.

Do: Test the new rubric with one instructor team during a practical exercise.

Check: Compare variation in scoring with historical data. Instructor confidence improved and assessment variations decreased.

Act: Roll out the revised rubric ETF-wide. Train all instructors and include the rubric in the QA Policy and subsequently in the accreditation documentation.
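One way the Check step in this example could quantify "variation in scoring" is to compare how far instructors' scores for the same performance spread out, before and after the new rubric. The sketch below assumes several instructors score each task independently; the function name and all scores are hypothetical.

```python
from statistics import mean, pstdev


def mean_scoring_spread(scores_by_task):
    """Average, over tasks, of the standard deviation of instructor scores."""
    return mean(pstdev(scores) for scores in scores_by_task)


# Each inner list holds scores given by different instructors to the same
# student performance (illustrative values only).
historical = [[60, 75, 90], [55, 70, 85]]
pilot = [[72, 75, 78], [68, 70, 72]]

print(f"historical spread: {mean_scoring_spread(historical):.2f}")
print(f"pilot spread:      {mean_scoring_spread(pilot):.2f}")
```

A clearly smaller spread in the pilot would support the conclusion that the revised rubric reduced differences in interpretation.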

Example 3: Enhancing the quality of After-Action Reviews (AARs)

Plan: AARs were producing repetitive, shallow insights. Feedback indicated facilitators lacked structure. The proposed improvement: A new AAR template aligned with learning objectives and performance standards.

Do: Pilot the new AAR structure in one field training exercise.

Check: Review the depth and quality of AAR outputs. Students and instructors reported more actionable findings.

Act: Adopt the new AAR format for all exercises and update the ETF’s QA Policy accordingly.

Example 4: Streamlining Course Administration Processes

Plan: The ETF experienced recurrent delays in issuing course certificates. Root cause analysis: Inconsistent handover between instructors and admin staff. Proposed solution: A step-by-step digital checklist with automatic reminders.

Do: Pilot the checklist during one course.

Check: Certificates were issued 40% faster. Admin errors decreased.

Act: Integrate the digital checklist into all courses, include it in internal QA audits, and reflect it in the relevant SOPs where appropriate.
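A figure such as "40% faster" is usually derived from a before/after comparison of turnaround times. The sketch below assumes that "faster" means a reduction in the average number of days needed to issue certificates; the function name and the day counts are hypothetical and chosen only to reproduce a 40% reduction.

```python
from statistics import mean


def percent_reduction(before, after):
    """Percentage by which the mean of `after` is lower than the mean of `before`."""
    baseline = mean(before)
    return (baseline - mean(after)) * 100 / baseline


# Hypothetical days from course end to certificate issue.
days_before = [9, 10, 11]
days_after = [5, 6, 7]

print(f"{percent_reduction(days_before, days_after):.0f}% shorter turnaround")
```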

Why PDCA Matters for Quality Assurance in E&IT

PDCA brings consistency, discipline, and accountability to improvement work across ETFs.

Key benefits for education and training:

- Improved learner experience and satisfaction

- Fewer recurring issues during accreditation or certification

- Higher instructional quality and more consistent delivery

- More reliable assessment processes

- Stronger internal QA culture

- Data-driven decision making

- Alignment with NATO Quality Standards and framework documents.

PDCA enables ETFs to improve confidently, knowing each change is tested, measured, and informed by lessons learned.

Common pitfalls to avoid

Even well-intentioned improvement efforts fail without discipline. Avoid:

- Jumping straight to solutions without analyzing the problem

- Testing too many changes at once

- Ignoring data and relying on intuition

- Failing to document results or lessons

- Not updating SOPs after improvements

- Treating PDCA as a one-time fix instead of a continuous loop

Success depends on evidence, focus, and iterative learning.

Conclusion: A core tool for ETF excellence

The PDCA cycle remains one of the most effective tools for driving the Continuous Improvement Process in the NATO Education & Individual Training landscape. Whether your focus is instructional quality, process efficiency, learner engagement, or compliance with NATO Quality Standards, PDCA provides a structured roadmap to reach higher performance.

For ETFs striving for NATO Course Certification or Institutional Accreditation, or simply aiming to deliver the best possible learning experience, PDCA is not just useful; it is essential. If you want your courses to run more smoothly, your processes to be more reliable, and your QA culture to be stronger, PDCA is a tool worth applying every day. That is how excellence is delivered consistently and not by accident.